"If I am on a computer by myself and sometimes it's barely adequate... how can it possibly work with hundreds of people sharing a server?"

We hear this over and over again as we talk to others and explain our technology. I think people assume that you divide the CPU speed by the number of users, and that the result is each user's time slice.

In fact, once people see how fast the software runs at Largo, they are amazed and often say it runs faster than it does on their own PCs. There are a few reasons for this:

* The servers are far more powerful than any computer you would have on your desk.

* Even with hundreds of people logged in, only a fraction of them are actively using the software at any given moment.

* When you have enough memory to hold everyone's programs in RAM, there is no swapping at all.

* There is never really a cold start of the software: it is always already running for someone else in the City.
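To make the difference between the naive "divide by everyone" assumption and the "only a fraction is active" reality concrete, here is a small back-of-the-envelope sketch. The capacity figure (8 cores) and the 10% active fraction are hypothetical numbers chosen for illustration, not measurements from Largo's servers; only the 180-user count comes from the Evolution shot below.

```python
def naive_share(total_cores: float, logged_in: int) -> float:
    """Naive model: CPU capacity split evenly across everyone logged in."""
    return total_cores / logged_in


def active_share(total_cores: float, logged_in: int, active_fraction: float) -> float:
    """Capacity split only across users who are actually doing work right now.

    active_fraction is a hypothetical estimate of how many logged-in users
    are busy at a given instant (e.g. 0.1 means 10%). At least one active
    user is assumed so the division is always defined.
    """
    active_users = max(1, round(logged_in * active_fraction))
    return total_cores / active_users


if __name__ == "__main__":
    cores = 8.0   # hypothetical server capacity, in CPU cores
    users = 180   # concurrent Evolution users from the shot below
    print(f"naive:  {naive_share(cores, users):.3f} cores per user")
    print(f"active: {active_share(cores, users, 0.1):.3f} cores per busy user")
```

Under these assumed numbers, each busy user sees roughly ten times the capacity the naive model predicts, which is the effect the second bullet describes.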

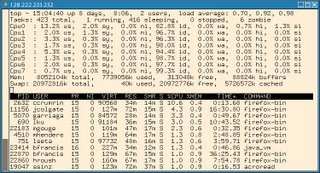

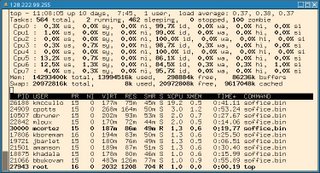

I thought I would show a few screenshots of `top` to demonstrate how well Linux handles multiple users. I think we could easily fit 2-3 times as many people on these servers if we had to. The most interesting thing to note is the CPU usage.

This shot shows OpenOffice 2.0.3, with 85 concurrent users at the time.

This shot shows Evolution 2.6, with 180 concurrent users at the time.

This shot shows Firefox 1.5, with 48 concurrent users at the time.
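If you want to produce concurrent-user counts like these on your own Linux server, one approach is to group running processes by name and count the distinct user IDs that own them. The sketch below is an assumption about how you might do that, not the method used for these screenshots: it reads `/proc/<pid>/comm` (which the kernel truncates to 15 characters) and takes the owner of each `/proc/<pid>` directory as the process's user.

```python
import os
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Set, Tuple


def count_users_by_program(procs: Iterable[Tuple[int, str]]) -> Dict[str, int]:
    """Map each process name to the number of distinct UIDs running it."""
    users: Dict[str, Set[int]] = defaultdict(set)
    for uid, comm in procs:
        users[comm].add(uid)
    return {comm: len(uids) for comm, uids in users.items()}


def read_procs(proc_root: str = "/proc") -> Iterator[Tuple[int, str]]:
    """Yield (uid, process name) pairs for every process under proc_root."""
    for entry in os.listdir(proc_root):
        if not entry.isdigit():  # only numeric entries are PIDs
            continue
        path = os.path.join(proc_root, entry)
        try:
            uid = os.stat(path).st_uid  # owner of /proc/<pid>
            with open(os.path.join(path, "comm")) as f:
                comm = f.read().strip()
        except OSError:
            # The process exited or is unreadable; skip it.
            continue
        yield uid, comm


if __name__ == "__main__" and os.path.isdir("/proc"):
    counts = count_users_by_program(read_procs())
    # Show the ten programs with the most distinct users.
    for name, n in sorted(counts.items(), key=lambda kv: -kv[1])[:10]:
        print(f"{n:5d}  {name}")
```

System daemons also appear in the output, so you would look up the specific program names you care about. Those names vary by version and distribution.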