I have had the pleasure of meeting Nat and Harish in person, and they were given a quick tour of our network and design. Since I have contact with so many more of you, I thought I would explain our design a bit and how it relates to GNOME and software.

There are three basic designs for deploying software to users. Largo has deployed Application Servers.

Desktop Computer: In this design a physical computer sits at the desk of each user, each loaded with an operating system and software. This is by far the most expensive and support-intensive way to deploy. Technology churn forces frequent upgrades and changes, and patches need to be applied to each machine. Even when patches are pushed from a central server, it's support intensive.

Departmental Servers: In this design, groups of users log in with thin clients. It's very similar to a desktop computer in that most of your software is located on one machine, except that multiple people are logging into that same machine. The problem with this design is that similar packages are still installed on multiple departmental servers, and each copy requires upgrades. It's also sometimes difficult to keep multiple software packages on one machine and upgrade them, because the library upgrades and patches one package needs can cause problems with other software. You also sometimes have problems where a user locks up a package or an NFS mount, which forces a reboot and kicks everyone off the server.

Application Servers: In this design, each unique major application is given its own server. This allows you to select the best operating system for that application, and upgrades can be installed without worrying about other things failing. Upgrades are very simple: you install the new version alongside the old one and then just change the launch script to point to the new version. From then on, each new request for the package gets the new version instantly, and the old version stays in place until nobody is still running it.
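To make the upgrade step concrete, here is a minimal sketch of the "install side by side, then repoint the launcher" idea. It is not our actual launch script. The directory layout, the `current` symlink, and the function names are all assumptions made up for illustration, and it assumes a POSIX filesystem where renaming over a symlink is atomic.

```python
import os
from pathlib import Path


def install_version(root: Path, version: str) -> Path:
    """Install a stand-in executable under root/<version>/bin/app (hypothetical layout)."""
    bin_dir = root / version / "bin"
    bin_dir.mkdir(parents=True)
    exe = bin_dir / "app"
    exe.write_text(f"#!/bin/sh\necho app {version}\n")
    exe.chmod(0o755)
    return exe


def switch_version(root: Path, version: str) -> None:
    """Repoint root/current at the given version.

    The new symlink is built under a temporary name and then renamed over
    the old one, so a launcher never sees a missing 'current' link.
    """
    tmp = root / "current.tmp"
    if tmp.is_symlink() or tmp.exists():
        tmp.unlink()
    tmp.symlink_to(version)  # relative link, resolved inside root
    os.replace(tmp, root / "current")


def launch_path(root: Path) -> Path:
    """Return the executable a launch script would start right now."""
    return (root / "current" / "bin" / "app").resolve()
```

A launch script built this way only ever starts whatever `launch_path` returns. Sessions that already started from the old directory keep running from it, which is why the old version can be removed once it is no longer in use.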

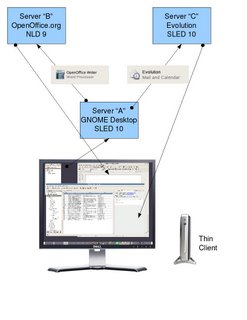

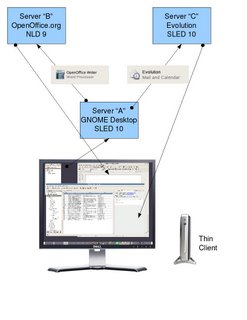

In our case, we have a big server running GNOME. All that runs on this server is the desktop itself, which allows users to customize their desktop. Basic settings like fonts, wallpapers, colors and themes are configured on this machine. When a user requests an application like Evolution, a signal is sent to another server that runs only that program. That server launches a session and remote displays it back to the end user. From a user's perspective, they cannot tell that they are logged into multiple servers at once, as it's fully automated.
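The sketch below shows one way such a hand-off could be expressed. It is not a description of how our launch signal actually works. It assumes plain `ssh` and the standard X11 `DISPLAY` variable, and the host names, the `APP_SERVERS` table, and the function name are hypothetical.

```python
import shlex
from typing import List

# Hypothetical mapping from application name to the server dedicated to it.
APP_SERVERS = {
    "evolution": "mail-server.example",
}


def build_launch_command(app: str, user: str, display: str) -> List[str]:
    """Build an ssh command that starts `app` on its own server and
    points its X11 output at the user's thin client display."""
    host = APP_SERVERS[app]  # raises KeyError for an unknown application
    remote = f"env DISPLAY={shlex.quote(display)} {shlex.quote(app)}"
    return ["ssh", f"{user}@{host}", remote]
```

For example, `build_launch_command("evolution", "alice", "thinclient42:0")` produces a command that could be passed to `subprocess.run`. The desktop icon only needs to know the application name. Which server runs it stays in one table.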

Because the horsepower comes from the servers, the users' thin client devices have a long duty cycle. Our current devices were deployed in 1997 and are still working fine. They are being retired because they lack certain features that have become standard over the past 10 years: the RENDER extension is missing on them, they only support 1024x768, and they do not have scroll wheels. This fall we are installing new thin clients which will once again have a 10 year duty cycle. A 500 dollar thin client therefore costs 50 dollars per user per year.
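The per-year figure is simple straight-line amortization of the purchase price over the duty cycle. A small sketch, with a function name of my own choosing, that covers hardware purchase cost only:

```python
def annual_cost_per_user(purchase_price: float, duty_cycle_years: int) -> float:
    """Spread the purchase price of one device evenly over its years of service."""
    if duty_cycle_years <= 0:
        raise ValueError("duty cycle must be at least one year")
    return purchase_price / duty_cycle_years
```

With the numbers above, `annual_cost_per_user(500, 10)` gives 50.0 dollars per user per year.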

I have created a simplified image of our design. The user logs into the GNOME server with a thin client. Once there, each icon they click starts a session on another server, and that session is displayed back on the user's device. This allows you to scale easily to hundreds of users on the same server, and it lowers support effort greatly. We have been running this design for 10 years and it's the most stable environment I have ever seen.